When we talk about scaling, we may mean running a program on multiple machines, storing huge amounts of data, or managing the set of resources used to serve thousands of users.

Why scaling?

We scale to serve more requests concurrently and faster, to store more data (your data may not fit on one machine or in one database), and to be more resilient to failure, for example by replicating your database or your running program.

What do we scale?

Different sites have different needs. For example, YouTube may need more bandwidth, while sites that do a lot of computation need more computers (memory for caching data, CPU). For big data centers, power is an important concern. Other websites, such as Facebook, where users upload their own content (e.g. photos), may require a large amount of storage space.

Techniques (approaches) for scaling:

Optimize code (how to program better) vs. adding more machines.

Another technique is caching the results of complex operations.

Upgrade machines: each year, new and faster computers (more memory, more disk space, faster CPUs) appear on the market.

Adding more machines may be a solution for scaling a web application.

Caching

Caching refers to storing the result of an operation so that future requests return faster. It is worth doing in the following cases:

- The computation is slow

- The computation will run multiple times

- The output is always the same for a particular input

- Your hosting provider charges for DB access

For example, suppose a database read query db_read() costs 100 ms. If you run the same read many times, the total cost grows with every call.

A cache can be implemented in a simple way with a hash table. Here is the caching algorithm:

    if request in cache:       # cache hit
        return cache[request]
    else:                      # cache miss
        r = db_read()
        cache[request] = r
        return r
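The algorithm above can be turned into a small runnable sketch. This is only an illustration: `db_read` here is a hypothetical stand-in that sleeps for the 100 ms quoted earlier, it takes the request as a parameter (an assumption, the original calls it without arguments), and `cached_read` and `db_reads` are names invented for the example.

```python
import time

DB_READ_SECONDS = 0.1   # the 100 ms cost quoted in the text
cache = {}              # the hash table: request -> result
db_reads = 0            # counts how often the slow path runs


def db_read(request):
    """Hypothetical stand-in for a slow database query."""
    global db_reads
    db_reads += 1
    time.sleep(DB_READ_SECONDS)
    return "result for " + request


def cached_read(request):
    if request in cache:          # cache hit: skip the database
        return cache[request]
    result = db_read(request)     # cache miss: do the slow read once
    cache[request] = result
    return result


first = cached_read("front_page")    # miss, pays the database cost
second = cached_read("front_page")   # hit, served from the dict
```

Only the first call reaches the simulated database; the second one is answered from the dictionary.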

Real example (ASCII Chan) for scaling

When a web application (e.g. ASCII Chan) receives a user request, it first has to process it, query the database to retrieve data, collate and prepare the result to send back to the user, and finally render the HTML that forms the web app's response to the user's request.

In this case, it is the database queries that should be optimized to improve the web application's performance (although some web apps do not use a database at all).

Query optimization

Queries can be optimized by using indexes and by simplifying the queries themselves, although Google App Engine already does this for us.
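To make the effect of an index concrete, here is a rough sketch using SQLite from Python's standard library. SQLite is only a stand-in (the original app runs on Google App Engine's datastore), and the `art` table, its columns, and the index name are hypothetical. `EXPLAIN QUERY PLAN` is SQLite's way of describing how it would execute a query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE art (id INTEGER PRIMARY KEY, title TEXT, body TEXT)")
conn.executemany(
    "INSERT INTO art (title, body) VALUES (?, ?)",
    [("art %d" % i, "ascii art") for i in range(1000)],
)

QUERY = "SELECT id, title FROM art WHERE title = ?"


def query_plan(sql, params):
    """Return SQLite's description of how it would run the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " ".join(row[-1] for row in rows)   # last column is the detail text


plan_before = query_plan(QUERY, ("art 42",))   # no index yet: table scan
conn.execute("CREATE INDEX idx_art_title ON art (title)")
plan_after = query_plan(QUERY, ("art 42",))    # same query, now via the index
```

Before the index exists the plan reports a scan of the whole table; afterwards it names `idx_art_title`, meaning the lookup no longer has to visit every row.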

To avoid serving stale data, the cache needs to be either cleared after new data is stored (so that the next read repopulates it with fresh data) or updated with the new data directly.

Before caching, we had one DB read per page view, which is very bad since most users are only reading the site's content. After adding the cache, we have one DB read per submission, which is much better.
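The "one DB read per submission" behaviour can be checked with a small sketch. Everything here is hypothetical scaffolding: `db` is a plain list standing in for the database, and `front_page`, `submit`, and the `"front"` cache key are names made up for the example. The write path clears the cache, which is one of the two options described above.

```python
db = []          # hypothetical stand-in for the database table
cache = {}       # cache key -> cached result
db_reads = 0     # counts real database reads


def db_read():
    global db_reads
    db_reads += 1
    return list(db)            # copy, so the cached value is a snapshot


def front_page():
    if "front" not in cache:   # cache miss: one real DB read
        cache["front"] = db_read()
    return cache["front"]


def submit(post):
    db.append(post)            # the write always goes to the DB
    cache.pop("front", None)   # clear, so the next read sees fresh data


submit("first post")
for _ in range(100):           # 100 page views
    front_page()
submit("second post")
for _ in range(100):           # 100 more page views
    page = front_page()
```

After two submissions and 200 page views, `db_reads` is 2 rather than 200, and the last page view still contains both posts.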

A cache stampede occurs when multiple cache misses create too much load on the database. For example, after a submission to the database, the cache gets cleared. If multiple read requests then arrive at the same time, they all find the cache empty and hit the database at once, each running the same query.
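One way to sidestep the stampede is to update the cache on a submission instead of clearing it, so readers never find it empty. The sketch below assumes a single process and uses hypothetical names throughout (`db`, `front_page`, `submit`, the `"front"` key); it is meant to show the idea, not a production-ready implementation.

```python
db = []          # hypothetical stand-in for the database table
cache = {}       # cache key -> cached result
db_reads = 0     # counts real database reads


def db_read():
    global db_reads
    db_reads += 1
    return list(db)


def front_page():
    if "front" not in cache:       # only reached on a cold start
        cache["front"] = db_read()
    return cache["front"]


def submit(post):
    db.append(post)
    cache["front"] = db_read()     # refresh in the write path, never empty


submit("first post")
reads_after_submit = db_reads
pages = [front_page() for _ in range(50)]   # burst of reads after the write
```

The single DB read happens inside `submit`, so the burst of 50 reads that follows is served entirely from the cache.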

Memcached

Using the memcached library provided by Google App Engine lets the web application's cache survive app restarts. It also makes the app stateless, which is key for scaling: no state is held by the app itself, only in cookies, the DB, or memcached.

A stateless app keeps no state between requests, so app instances become interchangeable and adding new ones is easy. Apps can also be scaled independently of the cache and the DB.
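The following sketch illustrates why moving the cache out of the app makes instances interchangeable. `SharedCache` is a hypothetical in-process stand-in for an external cache service such as memcached (it is not the App Engine memcache API), and `AppServer` and the `db` dictionary are likewise invented for the example.

```python
class SharedCache:
    """Hypothetical stand-in for an external cache service such as memcached."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)   # None on a miss

    def set(self, key, value):
        self._data[key] = value


class AppServer:
    """Keeps no request data itself; everything lives in the cache or DB."""

    def __init__(self, name, cache, db):
        self.name = name
        self.cache = cache
        self.db = db

    def handle(self, key):
        value = self.cache.get(key)
        if value is None:                 # miss: fall back to the DB
            value = self.db[key]
            self.cache.set(key, value)
            return value, "db"
        return value, "cache"


db = {"front": "<html>front page</html>"}   # stand-in for the real database
cache = SharedCache()
app_a = AppServer("a", cache, db)
app_b = AppServer("b", cache, db)

first = app_a.handle("front")    # app_a misses and fills the shared cache
second = app_b.handle("front")   # app_b is served from that same cache
```

Because neither instance keeps anything locally, a request can go to either one and get the same answer, and a restarted instance loses nothing.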

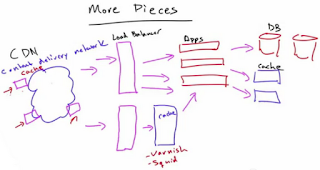

App Server Scaling

After taking a fair amount of load (mainly due to read operations) off the database with a cache, it is also important to consider the performance of the steps involved in a request:

- Process the request

- Query the database

- Collate the results

- Render HTML

A cache can also store the rendered HTML that is sent to the user. Another option, which improves the three other parts of the request, is to add more app servers.

When adding more servers (which may be hosted on different machines), we need to consider how to spread requests across them. This can be done with a load balancer: a dedicated machine optimized for distributing requests. It keeps a list of all available app servers and decides which of them each user request should be directed to. Examples of load balancers are HAProxy (used by reddit) and ZXTM.

There are several algorithms for load balancing that can be used to choose which server to send a request to:

- Random – select an app server at random.

- Round Robin – requests are directed to app servers in turn.

- Load-based algorithms – allocate requests based on current app server load.
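The three strategies listed above can be sketched in a few lines each. The server names, the load figures, and the function names are all hypothetical, and "load" is assumed here to mean the number of requests currently in flight; a real load balancer such as HAProxy is configured rather than coded this way.

```python
import itertools
import random

servers = ["app1", "app2", "app3"]   # hypothetical app server names


def pick_random(servers):
    """Random: any server, chosen uniformly."""
    return random.choice(servers)


def make_round_robin(servers):
    """Round robin: hand out servers in turn, wrapping around at the end."""
    cycle = itertools.cycle(servers)
    return lambda: next(cycle)


def pick_least_loaded(load):
    """Load-based: load maps server name -> requests currently in flight."""
    return min(load, key=load.get)


next_server = make_round_robin(servers)
round_robin_order = [next_server() for _ in range(5)]

current_load = {"app1": 7, "app2": 2, "app3": 5}
least_loaded = pick_least_loaded(current_load)
```

Random and round robin need no knowledge of the servers, which keeps them simple; the load-based choice needs the balancer to track or poll each server's current load.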
